Facebook announced that it will be temporarily banning some ads for gun accessories and body armor. It’s not enough.

On 16 January, Facebook announced that it will be “banning ads that promote weapon accessories and protective equipment in the US at least through January 22”. To those of us who have been observing the world of Trump-supporting social media, this announcement is a manipulative piece of whitewashing that obscures how Facebook’s algorithms continue to divide people the world over.

As part of my research while working as a consulting producer on Borat Subsequent Moviefilm, I created many pro-Trump social media accounts. The accounts offered a window into the Trump echo chamber, where radicalized users post chilling threats and unhinged vitriol. Yet as shocking as the posts can be, they make perfect sense if you look at the ads that bombard those accounts.

Roughly four out of five ads shown to my pro-Trump profiles sell tactical gear clearly intended for combat. This is not a new thing – it has been going on since I started looking at these accounts in June 2019, and it was probably going on much longer than that.

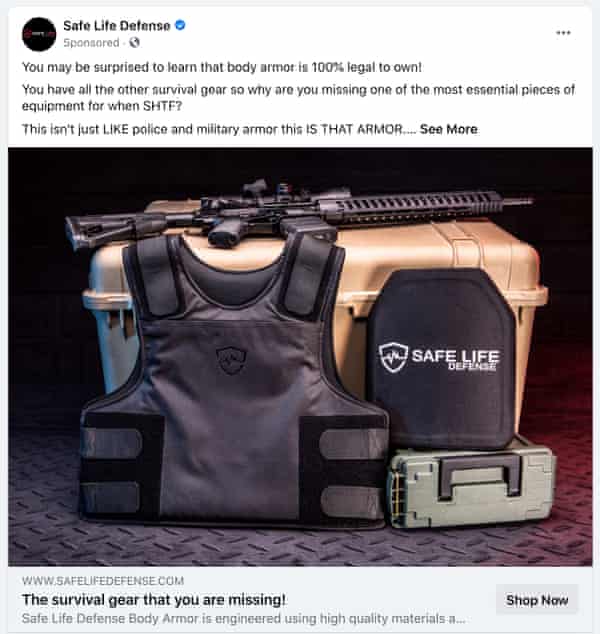

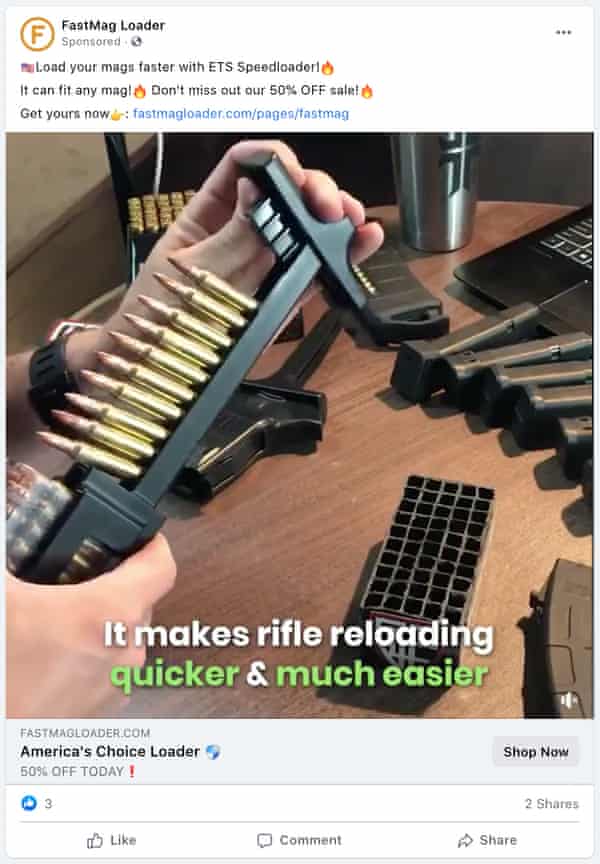

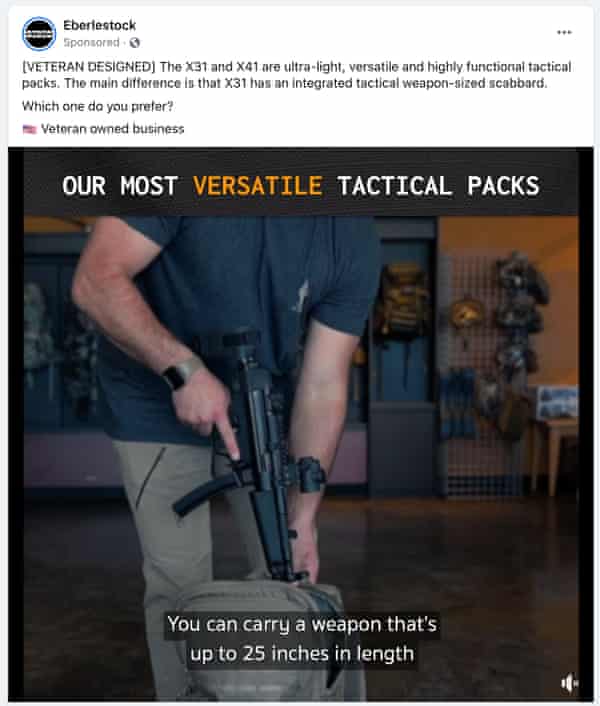

Although Facebook policy does not allow the direct advertisement of guns and bombs, accessories have generally been fair game. Tactical backpacks with integrated back scabbards that can hold weapons up to 25in long. Rapid clip loaders. Night vision sights. Shoulder holsters. Body armor.

Despite not actually selling guns, the vast majority of the ads nevertheless display military-style weapons somewhere in their design. An automatic rifle slides into the tactical backpack. The body armor is worn by someone actively poised to shoot a semi-automatic weapon. A black T-shirt presents an image of a medieval crusader in full armor holding a contemporary handgun, accompanied by a biblical quote: “Blessed be the lord my rock who trains my hands for war and my fingers for battle.”

My pro-Trump feeds are personalized e-commerce sites for Capitol invaders. The rare ads that do not sell tactical gear and accessories for armed combat pitch survival and security products, from enhanced door locks to backup generators. But the fact that you can buy stuff by clicking the links doesn’t even matter. What matters is what being surrounded by those ads does to your mind.

Being on Trump-facing Facebook takes me back to my cold war-era childhood in the early 1980s. I was fascinated by the magazine Soldier of Fortune, which offered a glimpse into the shady underworld of romantic mercenary fantasies. I had to wait until my friends got hold of copies before I could read them. (My father, who survived the Holocaust, disapproved.)

Soldier of Fortune’s pages focused on stories of mercenary life, combat tactics and – most exciting – a classified ads section for weapons and accessories, body armor, mail-order brides, and even hitmen. It was just a magazine, yet US courts held it liable for contract murders and injuries that resulted from its classifieds.

With some 190,000 subscribers at its peak, Soldier of Fortune sat at the margins of the mainstream. It was not on every newsstand. You had to go to Soldier of Fortune; it did not come to you. As a result, Soldier of Fortune reached and radicalized relatively few people.

But today that universe seeks you out and surrounds you. When you first join Facebook, you make a few choices of your own. But soon the algorithm starts narrowing your options and deciding what further choices to present to you. Because many of us rely on a limited number of news sources that populate our social media feeds, our information universe becomes more and more niche. For Trump supporters, that universe is often paramilitary.

According to a 2018 Pew study, seven in 10 US adults are on Facebook, and about half of all Americans check the platform every day. Those who have liked and shared pro-Trump posts, or who have mostly pro-Trump friends, are being bombarded with fear- and aggression-driven advertising warning them to stockpile weapons and accessories. Most of the paths presented lead deeper into the rabbit hole. Few lead out.

The problem we face as a society isn’t just fake news or political advertising. It is the math that drives the whole system. The platforms claim that their advertising algorithms give us what we want. That may be the case with the first few clicks or friends, but very quickly the algorithm takes control. Like the Pied Piper, it leads us into a land of extremes.

The effect of being trapped is psychologically intense. A few months into maintaining my Trump online identities, I started feeling that I needed to buy guns. I knew it was irrational and impractical given my lack of training and urban lifestyle. Nonetheless I was convinced I needed them. When I told my girlfriend, her perplexed response made me realize how deeply my mind had been manipulated by my pro-Trump Facebook feeds.

Facebook’s account bans have dominated the news lately, but its advertising algorithm has received far less scrutiny.

Ad revenue is the lifeblood of platforms like Facebook and Twitter, and ads from companies peddling military-style gear are key to creating the hateful communities we see online today. Changing the violent online world Facebook has created for “Trump’s Army” will require changing the algorithms themselves, the basic architecture of Facebook’s advertising – a market that is projected to bring in nearly $100bn for the company in the coming year.

There are fixes, but they are not about banning things. Facebook already bans ads for weapons, ammunition and explosives. Permanently extending the ban to tactical gear would just open a can of worms. How do you decide what is tactical and what is not? It would be just another attempt to treat the symptoms rather than cure the disease itself.

Better than banning more things would be to revisit how the algorithm makes its suggestions, and to come up with a solution that provides more avenues out of the rabbit hole than into it. Tweaking algorithms so that they serve up variety and diversity is just one idea. If platforms are going to monopolize people’s media consumption, they should have to serve up some common ground.

But maybe we can be more ambitious and try to create tech that builds society. Can our algorithms privilege love over hate? Can we make equations that appeal to our humanity rather than preying on our fears?

The Biden administration needs to establish a taskforce to tackle the issue. There is a long history of regulating the media in the public interest. If we let social media companies like Facebook continue to accelerate division, democracy is just going to bleed out.

Igor Vamos is a film-maker and activist known for his work with the Yes Men. He is also a professor of media art at Rensselaer Polytechnic Institute